Introduction

Last updated: 9th of March 2022

ARLO (Automated Reinforcement Learning Optimizer) is a Python library for automated reinforcement learning.

ARLO is built on top of MushroomRL, a Python library for reinforcement learning.

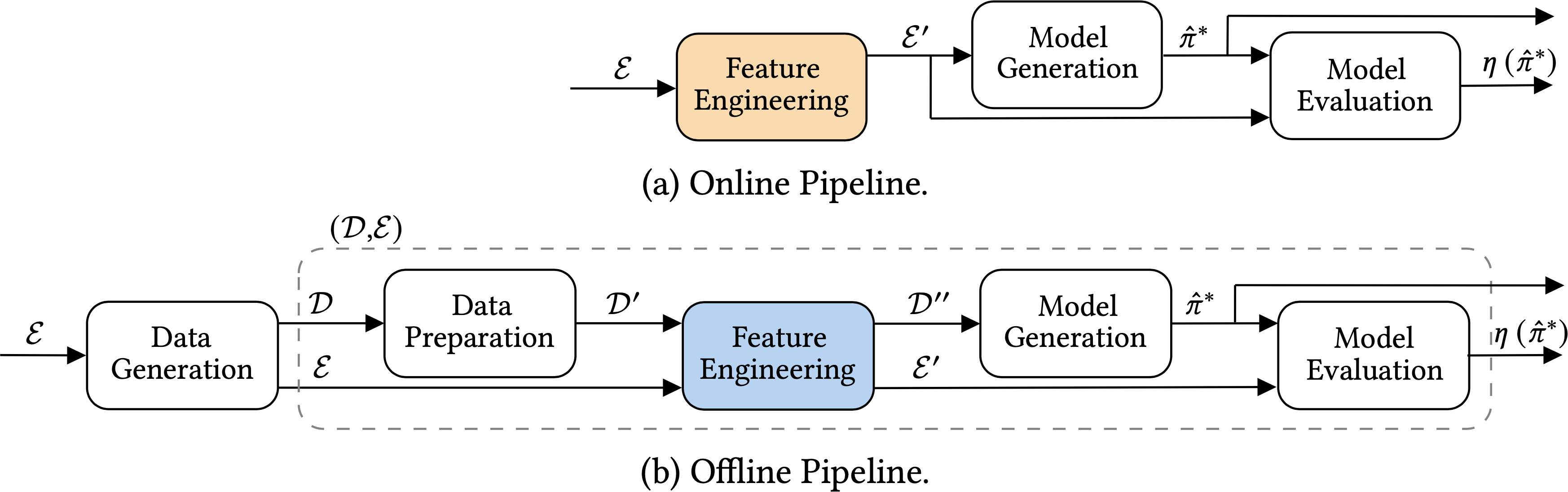

In ARLO, the most general offline and online RL pipelines are the ones represented below:

In ARLO, a pipeline is made up of stages. Each stage can either be run with a fixed set of hyper-parameters or be an automatic stage, in which the hyper-parameters are tuned. It is also possible to build an automatic pipeline, in which the hyper-parameters of all the stages making up the pipeline are tuned.
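To make the difference between fixed stages, automatic stages, and an automatic pipeline concrete, here is a minimal, self-contained sketch in plain Python. It is not ARLO's API: `Stage`, `candidate_params`, `run_pipeline`, and `run_automatic_pipeline` are hypothetical names, and exhaustive grid search stands in for whatever tuner a real stage would use. In this sketch an automatic stage is tuned on its own output before the next stage runs, whereas the automatic pipeline evaluates every combination of all the stages' hyper-parameters end to end.

```python
import itertools
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple

# Hypothetical types for illustration only; these are NOT ARLO classes.
Params = Dict[str, Any]


@dataclass
class Stage:
    """A pipeline stage that maps (data, params) -> new data."""
    name: str
    run: Callable[[Any, Params], Any]
    params: Params = field(default_factory=dict)  # fixed hyper-parameters
    # A non-empty search space turns the stage into an automatic (tuned) stage.
    search_space: Dict[str, List[Any]] = field(default_factory=dict)


def candidate_params(stage: Stage) -> List[Params]:
    """All configurations to try: just the fixed one, or every grid point."""
    if not stage.search_space:
        return [dict(stage.params)]
    keys = list(stage.search_space)
    grids = [stage.search_space[k] for k in keys]
    return [{**stage.params, **dict(zip(keys, values))}
            for values in itertools.product(*grids)]


def run_pipeline(stages: List[Stage], data: Any, score: Callable[[Any], float]) -> Any:
    """Run stages in order; each automatic stage is tuned on its own output."""
    for stage in stages:
        candidates = candidate_params(stage)
        # max() returns the first candidate with the highest score.
        best = candidates[0] if len(candidates) == 1 else max(
            candidates, key=lambda p: score(stage.run(data, p)))
        data = stage.run(data, best)
    return data


def run_automatic_pipeline(stages: List[Stage], data: Any,
                           score: Callable[[Any], float]) -> Tuple[List[Params], float]:
    """Jointly tune all stages: every combination is run end to end."""
    best_combo, best_value = None, float("-inf")
    for combo in itertools.product(*(candidate_params(s) for s in stages)):
        out = data
        for stage, params in zip(stages, combo):
            out = stage.run(out, params)
        value = score(out)
        if value > best_value:
            best_combo, best_value = list(combo), value
    return best_combo, best_value


# Toy usage: scale a list, then shift it; the metric rewards a sum close to 9.
stages = [
    Stage("scale", lambda x, p: [v * p["factor"] for v in x],
          search_space={"factor": [1, 2, 3]}),
    Stage("shift", lambda x, p: [v + p["offset"] for v in x],
          params={"offset": 1}),
]


def score(out: List[int]) -> float:
    return -abs(sum(out) - 9)


print(run_pipeline(stages, [1, 2], score))            # [4, 7]
print(run_automatic_pipeline(stages, [1, 2], score))  # ([{'factor': 2}, {'offset': 1}], -1)
```

With this toy metric, tuning the `scale` stage in isolation picks `factor=3`, while tuning the whole pipeline picks `factor=2`, which scores better on the final output. This is one reason why jointly tuning all stages can lead to a different configuration than tuning each stage on its own.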

Custom implementations can be used in any stage and for any purpose (algorithm, metric, tuner, environment, and so on).

Besides the implementation of the ARLO framework and of specific stages, the library offers several other capabilities, such as saving and loading objects, plotting performance, and creating heatmaps that show the impact of pairs of hyper-parameters on the performance of the optimal configuration obtained in a Tunable Unit of an Automatic Unit.

If specified, these heatmaps are created automatically with Plotly at the end of every Tunable Unit, and they are interactive. An example can be seen here.
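One plausible way to compute such heatmaps, assuming every hyper-parameter outside the pair is held at its value in the optimal configuration, is sketched below. This is an illustration rather than ARLO's implementation: `pairwise_heatmap`, `all_pair_heatmaps`, and `plot_heatmap` are hypothetical helpers, and `evaluate` stands in for whatever performance metric the Tunable Unit uses. Only the optional plotting step depends on Plotly (`plotly.graph_objects.Heatmap`), which is imported lazily so the rest runs without it.

```python
import itertools
from typing import Any, Callable, Dict, List, Sequence, Tuple

# Hypothetical helpers for illustration only; not ARLO's heatmap code.
Config = Dict[str, Any]


def pairwise_heatmap(evaluate: Callable[[Config], float], best_config: Config,
                     x_name: str, x_values: Sequence[Any],
                     y_name: str, y_values: Sequence[Any]) -> List[List[float]]:
    """z[i][j] = performance with y_name=y_values[i] and x_name=x_values[j];
    all other hyper-parameters keep their values from best_config."""
    return [[evaluate({**best_config, x_name: x, y_name: y}) for x in x_values]
            for y in y_values]


def all_pair_heatmaps(evaluate: Callable[[Config], float], best_config: Config,
                      grids: Dict[str, Sequence[Any]]
                      ) -> Dict[Tuple[str, str], List[List[float]]]:
    """One matrix for every unordered pair of tuned hyper-parameters."""
    return {(xn, yn): pairwise_heatmap(evaluate, best_config,
                                       xn, grids[xn], yn, grids[yn])
            for xn, yn in itertools.combinations(grids, 2)}


def plot_heatmap(z, x_name, x_values, y_name, y_values):
    """Optional interactive rendering; requires Plotly to be installed."""
    import plotly.graph_objects as go  # imported lazily: Plotly is optional here
    fig = go.Figure(data=go.Heatmap(z=z, x=list(x_values), y=list(y_values)))
    fig.update_layout(xaxis_title=x_name, yaxis_title=y_name)
    return fig


# Toy metric (hypothetical): best at a=2, b=1, c=0.
def evaluate(cfg: Config) -> float:
    return -(cfg["a"] - 2) ** 2 - (cfg["b"] - 1) ** 2 - cfg["c"]


best_config = {"a": 2, "b": 1, "c": 0}
grids = {"a": [1, 2, 3], "b": [0, 1], "c": [0, 1]}
heatmaps = all_pair_heatmaps(evaluate, best_config, grids)
print(heatmaps[("a", "b")])  # [[-2, -1, -2], [-1, 0, -1]]
# With Plotly installed:
# plot_heatmap(heatmaps[("a", "b")], "a", grids["a"], "b", grids["b"]).show()
```

Each matrix row corresponds to one value of the second hyper-parameter in the pair, matching Plotly's convention that `z[i][j]` is drawn at `(x[j], y[i])`.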